lycium

« Reply #30 on: March 28, 2007, 05:21:11 AM »

> How much more precision do you think I'd gain if I refactored my code to calculate x and y position each time?

lazy! test it out, that's < 1 line of code. or you could do a little thought experiment: how often have your x/y values been subjected to roundoff errors in the further reaches of your image (depending on how you split it)?

> Actually, it's a design change to my app. It's built on passing step values to the plot code.

design change? you will still use those very same step values! i guarantee you that the "snapping" code you have, besides precluding supersampling by the sound of things, is a zillion times slower than just 2 extra multiplies.

> I think not. My "snap to grid" logic takes place ONCE, at the beginning of a plot, and involves a half-dozen floating point calculations, once. 2 multiplies/pixel is a lot more calculations than a half-dozen calculations for the whole plot. Also, my snap to grid code lets me recognize symmetric areas of my plot and skip ALL the calculations for symmetric areas, and instead just copy the pixels. That saves potentially millions of floating point calculations.

hmm i don't really understand the snapping then, but what's especially enigmatic to me is your investment in symmetry exploitation... 50% at best (i.e. the centred mandelbrot and julia we all have burnt into our retinas from the 80s) is small fish and with any degree of zooming you can basically call it 0%.

cKleinhuis

« Reply #31 on: March 28, 2007, 08:18:35 AM »

> is small fish and with any degree of zooming you can basically call it 0%.

i must agree to that. besides that, even optimization based on rectangles with the same edge color leads to nothing if you use a coloring method other than iteration->colorindex

---

divide and conquer - iterate and rule - chaos is No random!
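The rectangle optimization cKleinhuis mentions is usually done by recursive subdivision (often called the Mariani-Silver algorithm): compute a rectangle's border, and if every border pixel has the same iteration count, fill the interior without iterating it. The sketch below assumes a hypothetical `iterations(px, py)` callback that returns an integer escape count. It is a heuristic (a feature too thin to touch any border can be missed), and with smooth or fractional coloring the border values are almost never identical, which is exactly why the trick stops paying off there.

```python
from typing import Callable, List, Set

def render_rectangles(width: int, height: int,
                      iterations: Callable[[int, int], int],
                      min_size: int = 4) -> List[List[int]]:
    """Fill a width x height grid of iteration counts, skipping the interior
    of any rectangle whose border pixels all share one iteration count."""
    if min_size < 1:
        raise ValueError("min_size must be at least 1")
    grid = [[-1] * width for _ in range(height)]  # -1 marks "not computed yet"

    def value(x: int, y: int) -> int:
        # Neighbouring rectangles share edges, so compute each pixel only once.
        if grid[y][x] < 0:
            grid[y][x] = iterations(x, y)
        return grid[y][x]

    def subdivide(x0: int, y0: int, x1: int, y1: int) -> None:
        # The rectangle covers columns x0..x1 and rows y0..y1, inclusive.
        border: Set[int] = set()
        for x in range(x0, x1 + 1):
            border.add(value(x, y0))
            border.add(value(x, y1))
        for y in range(y0, y1 + 1):
            border.add(value(x0, y))
            border.add(value(x1, y))
        if len(border) == 1:
            fill = border.pop()  # uniform border: assume the interior matches
            for y in range(y0 + 1, y1):
                for x in range(x0 + 1, x1):
                    grid[y][x] = fill
            return
        if x1 - x0 <= min_size or y1 - y0 <= min_size:
            # Too small to be worth splitting: compute every pixel directly.
            for y in range(y0, y1 + 1):
                for x in range(x0, x1 + 1):
                    value(x, y)
            return
        xm, ym = (x0 + x1) // 2, (y0 + y1) // 2  # children share these edges
        subdivide(x0, y0, xm, ym)
        subdivide(xm, y0, x1, ym)
        subdivide(x0, ym, xm, y1)
        subdivide(xm, ym, x1, y1)

    subdivide(0, 0, width - 1, height - 1)
    return grid
```

For a region of constant iteration count, only the outer border gets iterated; everything inside is copied.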

Duncan C

« Reply #32 on: March 28, 2007, 03:36:56 PM »

> i must agree to that. besides that, even optimization based on rectangles with the same edge color leads to nothing if you use a coloring method other than iteration->colorindex

My program is based on iteration counts and color tables. I calculate the iteration values for a plot, and then map iteration values to colors for the points in the plot. I map iteration values to colors at display time. My program allows me to go back and use DIFFERENT colors at any time, in near real time. Changing colors on an on-screen plot makes for interesting animations. Colors are only used for display.

The boundary following algorithm I am planning to use will trace contiguous areas with the same ITERATION VALUE, not color. It's true that this approach will not be useful for other methods of coloring a plot, like using fractional escape values.

Duncan C

Regards,

Duncan C
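Storing iteration counts and applying colors only at display time, as Duncan describes, can be sketched like this. The palette ramp and the `offset` parameter used for color cycling are illustrative assumptions, not Duncan's actual code; points that reached `max_iter` are drawn black.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]

def make_palette(size: int) -> List[RGB]:
    """A simple blue-to-yellow ramp (size >= 2); any list of RGB tuples works."""
    return [(255 * i // (size - 1), 255 * i // (size - 1), 255 - 255 * i // (size - 1))
            for i in range(size)]

def colorize(counts: List[List[int]], palette: List[RGB], max_iter: int,
             offset: int = 0) -> List[List[RGB]]:
    """Map stored iteration counts to colors. Calling this again with a new
    palette or offset recolors the plot without recomputing any iterations."""
    inside: RGB = (0, 0, 0)  # counts that hit max_iter are treated as inside the set
    n = len(palette)
    return [[inside if c >= max_iter else palette[(c + offset) % n] for c in row]
            for row in counts]
```

Stepping `offset` from 0 to `len(palette) - 1` and redisplaying each result gives a color-cycling animation while the iteration buffer stays untouched.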

Duncan C

« Reply #33 on: March 28, 2007, 03:58:28 PM »

> How much more precision do you think I'd gain if I refactored my code to calculate x and y position each time?
>
> lazy! test it out, that's < 1 line of code. or you could do a little thought experiment: how often have your x/y values been subjected to roundoff errors in the further reaches of your image (depending on how you split it)?
>
> Actually, it's a design change to my app. It's built on passing step values to the plot code.
>
> design change? you will still use those very same step values!

That's not how I understood the original poster's comments. The way I understood it, the step values were the problem. As the code handles plots at higher and higher magnifications, the step values get very small and start to have rounding errors. I got the impression that he was suggesting rewriting the plot code to calculate the x, y position of each point as

complex_x_coord = min_complex_x + (pixel_x/total_x_pixels) * x_width

complex_y_coord = min_complex_y + (pixel_y/total_y_pixels) * y_height

That way each x and y position would be calculated directly, rather than based on a step value. I guess multiplying the pixel coordinates by the step value would give smaller rounding errors than adding the step value to each previous coordinate, but it seems to me that getting rid of the step value entirely would give the most accurate results.

> i guarantee you that the "snapping" code you have, besides precluding supersampling by the sound of things, is a zillion times slower than just 2 extra multiplies.

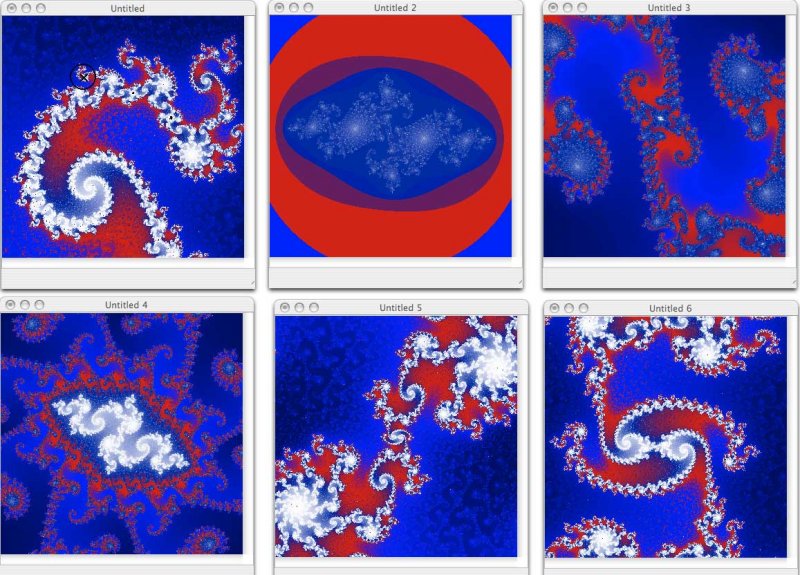

> I think not. My "snap to grid" logic takes place ONCE, at the beginning of a plot, and involves a half-dozen floating point calculations, once. 2 multiplies/pixel is a lot more calculations than a half-dozen calculations for the whole plot. Also, my snap to grid code lets me recognize symmetric areas of my plot and skip ALL the calculations for symmetric areas, and instead just copy the pixels. That saves potentially millions of floating point calculations.
>
> hmm i don't really understand the snapping then, but what's especially enigmatic to me is your investment in symmetry exploitation... 50% at best (i.e. the centred mandelbrot and julia we all have burnt into our retinas from the 80s) is small fish and with any degree of zooming you can basically call it 0%.

For Mandelbrot plots, symmetry isn't all that useful, since most of the interesting areas are well off the x axis. For Julias, however, it has real benefit. I find the symmetry of Julia sets to be part of their visual appeal.

As mentioned in a previous post, I've been investigating the way Julia sets have structure on several different scales depending on the source Mandelbrot point's location on several different scales. For this type of investigation, I zoom in on the center of a Julia plot repeatedly. All the resulting plots are symmetric, and mapping symmetric areas and copying them doubles the plot speed. I think a 100% speed improvement is worth the effort.

I'll post a screen shot from my application that shows what I'm talking about later today if I get a chance.

Duncan C
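The Julia symmetry follows from the iteration itself: for f(z) = z^2 + c we have f(-z) = f(z), so z and -z always get the same escape count, and a Julia plot centered on the origin is symmetric under a 180-degree rotation. (The Mandelbrot set's symmetry is a different one, a reflection across the real axis.) A minimal sketch, assuming a view centered exactly on 0 with a square pixel size `step`; the function names are illustrative. Coordinates are computed from the center, so each mirrored pixel's coordinate is exactly the negation of the computed one.

```python
from typing import List

def julia_count(zx: float, zy: float, cx: float, cy: float, max_iter: int) -> int:
    """Escape-time count for z -> z^2 + c starting at z = zx + i*zy."""
    for n in range(max_iter):
        if zx * zx + zy * zy > 4.0:
            return n
        zx, zy = zx * zx - zy * zy + cx, 2.0 * zx * zy + cy
    return max_iter

def julia_symmetric(width: int, height: int, step: float,
                    cx: float, cy: float, max_iter: int) -> List[List[int]]:
    """Iterate only the top half of the rows; fill each pixel's 180-degree
    partner (the pixel at -z) with the same count instead of iterating it."""
    grid = [[0] * width for _ in range(height)]
    for j in range((height + 1) // 2):
        y = ((height - 1) / 2 - j) * step        # imaginary part of row j
        for i in range(width):
            x = (i - (width - 1) / 2) * step     # real part of column i
            n = julia_count(x, y, cx, cy, max_iter)
            grid[j][i] = n
            grid[height - 1 - j][width - 1 - i] = n  # the pixel at -z
    return grid
```

As lycium points out, this only helps when the view is centered on the origin; any pan off center breaks the pixel pairing.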

David Makin

« Reply #34 on: March 28, 2007, 04:43:34 PM »

> That's not how I understood the original poster's comments. The way I understood it, the step values were the problem. As the code handles plots at higher and higher magnifications, the step values get very small and start to have rounding errors. I got the impression that he was suggesting rewriting the plot code to calculate the x, y position of each point as
>
> complex_x_coord = min_complex_x + (pixel_x/total_x_pixels) * x_width
>
> complex_y_coord = min_complex_y + (pixel_y/total_y_pixels) * y_height

But surely your delta/step values are precalculated as x_width/total_x_pixels and y_height/total_y_pixels in any case (that's what lycium meant by "the very same step values"). You only add the slight overhead of 1 multiply per outer loop and 1 per inner loop, which is not much extra for the resulting increase in accuracy, considering the speed of FPU multiplies nowadays (it should give you around an extra *100 magnification).
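David's point, that the deltas are still precomputed once and only one extra multiply per loop level is added, looks roughly like the loop below. `plot_point` is a hypothetical per-pixel callback; the only change from a step-based loop is that each coordinate is `min + index * delta` instead of a running `+= delta`, so rounding no longer builds up along a row or down a column.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")

def plot(min_x: float, min_y: float, x_width: float, y_height: float,
         total_x_pixels: int, total_y_pixels: int,
         plot_point: Callable[[float, float], T]) -> List[List[T]]:
    """Visit every pixel, computing its complex coordinate as
    min + index * delta rather than by accumulating delta."""
    # The two divides happen once per plot, just as in a step-based design.
    dx = x_width / total_x_pixels
    dy = y_height / total_y_pixels
    rows: List[List[T]] = []
    for py in range(total_y_pixels):
        y = min_y + py * dy            # 1 multiply per row (outer loop)
        row: List[T] = []
        for px in range(total_x_pixels):
            x = min_x + px * dx        # 1 multiply per pixel (inner loop)
            row.append(plot_point(x, y))
        rows.append(row)
    return rows
```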

Duncan C

« Reply #35 on: March 28, 2007, 10:18:35 PM »

> That's not how I understood the original poster's comments. The way I understood it, the step values were the problem. As the code handles plots at higher and higher magnifications, the step values get very small and start to have rounding errors. I got the impression that he was suggesting rewriting the plot code to calculate the x, y position of each point as
>
> complex_x_coord = min_complex_x + (pixel_x/total_x_pixels) * x_width
>
> complex_y_coord = min_complex_y + (pixel_y/total_y_pixels) * y_height

> But surely your delta/step values are precalculated as x_width/total_x_pixels and y_height/total_y_pixels in any case (that's what lycium meant by "the very same step values"). You only add the slight overhead of 1 multiply per outer loop and 1 per inner loop, which is not much extra for the resulting increase in accuracy, considering the speed of FPU multiplies nowadays (it should give you around an extra *100 magnification).

Is it the repetitive addition that leads to errors, or is it the small scale of the delta value? My thinking is that the method that would lead to the LEAST error is what I posted: calculating the x and y coordinates directly, rather than multiplying by a precalculated delta value.

I'm up to my elbows in other code changes right now. When I get a chance I'll do some testing.

Duncan C
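Duncan's question (repetitive addition, or the small delta?) can be tested directly. This sketch compares, for one row of pixels, a running sum (`x += dx`), the precomputed-delta multiply, and the per-pixel divide formula against exact rational arithmetic using Python's `fractions.Fraction`. The inputs in the usage line are arbitrary assumptions, not values from the thread.

```python
from fractions import Fraction
from typing import Dict

def max_coordinate_errors(min_x: float, width: float, n: int) -> Dict[str, float]:
    """Largest absolute error, over n pixels, of three ways to compute
    pixel i's coordinate, measured against exact rational arithmetic."""
    dx = width / n
    exact_min, exact_width = Fraction(min_x), Fraction(width)
    worst = {"accumulate": 0.0, "multiply": 0.0, "direct": 0.0}
    acc = min_x
    for i in range(n):
        exact = exact_min + exact_width * i / n
        candidates = {
            "accumulate": acc,                   # x += dx, once per pixel
            "multiply": min_x + i * dx,          # precomputed delta, 1 multiply
            "direct": min_x + (i / n) * width,   # 1 divide per pixel
        }
        for name, value in candidates.items():
            worst[name] = max(worst[name], abs(float(Fraction(value) - exact)))
        acc += dx
    return worst

print(max_coordinate_errors(-0.75, 1e-12, 1000))
```

For inputs like these, the running sum's error grows with the number of pixels, because the same rounding is repeated on every addition, while the multiply and direct forms each round only a couple of times and stay within roughly half an ulp of the coordinate. That is consistent with David's reply that the extra divide buys essentially nothing.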

Duncan C

« Reply #36 on: March 28, 2007, 10:33:32 PM »

I posted an image on my pBase account that shows what I am talking about with Julia sets having different structure at different scales, reflecting the morphology of the Mandelbrot set at different scales. Click the link below to see the full sized image, along with an explanation of the plot:

click to see the larger "original" version on pbase.

Duncan C

« Last Edit: March 29, 2007, 11:59:15 PM by Nahee_Enterprises »

David Makin

« Reply #37 on: March 29, 2007, 01:21:07 PM »

When using plain delta values (i.e. without the extra multiplies) it's the repetitive add that causes the rounding errors.

For example, if the real value is 1.0 but the delta is 0.00000000000123456, then some of the 123456 will get lost on each addition (i.e. the error is incremental), but if you use basevalue + numpixels*delta, e.g. 1.0 + numpixels*0.00000000000123456, then this rounding error is reduced (it is no longer incremental). There's still a rounding error, but it allows zooming to an extra *100 or so.
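David's example can be checked in a few lines. `(base + delta) - base` shows how much of the delta actually survives a single addition (for a small positive delta the subtraction itself is exact, so any difference is rounding from the addition), and comparing n additions against one `base + n * delta`, both measured against exact arithmetic, shows the error compounding. The helper names are illustrative.

```python
from fractions import Fraction

def increment_after_rounding(base: float, delta: float) -> float:
    """(base + delta) - base: the part of delta that survives one addition."""
    return (base + delta) - base

def accumulated(base: float, delta: float, n: int) -> float:
    """base + delta + delta + ... (n additions): the step-value approach."""
    x = base
    for _ in range(n):
        x += delta
    return x

def multiplied(base: float, delta: float, n: int) -> float:
    """base + n * delta: one multiply and one add, each rounded once."""
    return base + n * delta

def error(value: float, base: float, delta: float, n: int) -> float:
    """Absolute error against exact rational arithmetic on the same inputs."""
    return abs(float(Fraction(value) - (Fraction(base) + n * Fraction(delta))))

delta = 0.00000000000123456   # David's example delta
print(increment_after_rounding(1.0, delta) - delta)
print(error(accumulated(1.0, delta, 1000), 1.0, delta, 1000))
print(error(multiplied(1.0, delta, 1000), 1.0, delta, 1000))
```

Near 1.0, doubles are 2^-52 apart, so each addition snaps the delta to a whole number of those steps; because the same rounding happens on every pixel, it adds up instead of averaging out.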

Duncan C

« Reply #38 on: March 29, 2007, 01:46:33 PM »

> When using plain delta values (i.e. without the extra multiplies) it's the repetitive add that causes the rounding errors.
>
> For example, if the real value is 1.0 but the delta is 0.00000000000123456, then some of the 123456 will get lost on each addition (i.e. the error is incremental), but if you use basevalue + numpixels*delta, e.g. 1.0 + numpixels*0.00000000000123456, then this rounding error is reduced (it is no longer incremental). There's still a rounding error, but it allows zooming to an extra *100 or so.

That makes sense. Thanks for the clarification. Don't you think I would gain additional precision if I didn't use a delta value at all, but instead calculated my x and y position for each pixel position?

Duncan C

David Makin

« Reply #39 on: March 29, 2007, 06:44:41 PM »

No, there's no point doing the divide to calculate the deltas within the loops; the extra precision from doing that would be negligible, if any, and divides are considerably slower than multiplies or adds.
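If the per-pixel form `min + (pixel/total) * width` is wanted anyway, the usual way to avoid the divide is to hoist the reciprocal out of the loop, as sketched below. Note that `px * (1.0 / total)` is not always bit-identical to `px / total`; the results can differ by an ulp or so, which is harmless at this scale. The function names are illustrative.

```python
from typing import List

def coords_with_divide(min_x: float, width: float, total: int) -> List[float]:
    """min + (pixel/total) * width, with one divide per pixel."""
    return [min_x + (px / total) * width for px in range(total)]

def coords_with_reciprocal(min_x: float, width: float, total: int) -> List[float]:
    """The same formula, with the divide hoisted out of the loop."""
    inv_total = 1.0 / total            # the only divide, done once per plot
    return [min_x + (px * inv_total) * width for px in range(total)]
```

This is the "effective use of reciprocals" idea in its simplest form: pay for one divide up front, then use multiplies inside the loop.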

lycium

« Reply #40 on: March 30, 2007, 11:40:05 AM »

> No, there's no point doing the divide to calculate the deltas within the loops; the extra precision from doing that would be negligible, if any, and divides are considerably slower than multiplies or adds.

correct, by a factor of 200 or more, ESPECIALLY in double precision. that's the big kicker duncan: you're willing to sacrifice a ton of speed to get that extra accuracy, but then you squander it on cumulative roundoff error!

try this: time how long it takes to add 1/x x times to an accumulating variable, and see how far off the answer gets from 1 as x increases. then time how long it takes you to do x multiplies, and finally time how long x divides take...

a quote from donald knuth is appropriate here: "We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil." (emphasis mine)

i've said several times that you're spending your time in the wrong places. i bet there are speedups of 10x or more lurking in your program if you're not aware of basics like constant optimisation, effective use of reciprocals, threading principles, etc. spending time on this symmetry stuff* and worrying about the (negligible) speed difference between an add and a multiply, particularly at an extreme precision cost, is holding you back: rather learn to write tight generic code than desperately try to speed up poor algorithms.

* that's REALLY clutching at straws, and if you can't convince yourself of that you have good reason for despair. your speedup is directly proportional to the boringness of the resulting image, up to a maximum of only 2x (cf. the 200x mentioned above!), and as soon as you look away from the real axis it slows down. even if you're obsessive about that real axis, i don't think all your users (still considering going commercial?) will be.
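lycium's suggested experiment, sketched in Python. `sum_of_reciprocals` shows the cumulative roundoff from adding 1/x x times; `time_ops` gives rough timings of dependent adds, multiplies and divides. In CPython the interpreter overhead per operation dwarfs the FPU cost, so the ratios it reports will be much flatter than on bare hardware; treat it as the shape of the experiment and repeat it in a compiled language for meaningful ratios.

```python
import operator
import time
from typing import Dict

def sum_of_reciprocals(x: int) -> float:
    """Add 1/x to an accumulator x times. The exact answer is 1, so the
    difference from 1.0 is cumulative roundoff (plus the rounding of 1/x)."""
    step = 1.0 / x
    total = 0.0
    for _ in range(x):
        total += step
    return total

def time_ops(n: int) -> Dict[str, float]:
    """Seconds taken by n dependent adds, multiplies and divides."""
    timings = {}
    for name, op in (("add", operator.add), ("mul", operator.mul),
                     ("div", operator.truediv)):
        acc = 1.000001
        start = time.perf_counter()
        for _ in range(n):
            acc = op(acc, 0.999999)    # each result feeds the next operation
        timings[name] = time.perf_counter() - start
    return timings

for x in (10, 10_000, 10_000_000):
    print(x, sum_of_reciprocals(x) - 1.0)
print(time_ops(1_000_000))
```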

« Last Edit: March 30, 2007, 11:57:44 AM by lycium »

gandreas

« Reply #41 on: March 30, 2007, 04:45:19 PM »

> a quote from donald knuth is appropriate here: "We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil." (emphasis mine)

And the corollary to this is "The biggest performance optimization is going from not working to working". After all, getting the wrong answer really fast isn't exactly useful...

Duncan C

« Reply #42 on: April 02, 2007, 06:10:59 AM »

> No, there's no point doing the divide to calculate the deltas within the loops; the extra precision from doing that would be negligible, if any, and divides are considerably slower than multiplies or adds.
>
> correct, by a factor of 200 or more, ESPECIALLY in double precision. that's the big kicker duncan: you're willing to sacrifice a ton of speed to get that extra accuracy, but then you squander it on cumulative roundoff error!
>
> try this: time how long it takes to add 1/x x times to an accumulating variable, and see how far off the answer gets from 1 as x increases. then time how long it takes you to do x multiplies, and finally time how long x divides take...
>
> a quote from donald knuth is appropriate here: "We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil." (emphasis mine)
>
> i've said several times that you're spending your time in the wrong places. i bet there are speedups of 10x or more lurking in your program if you're not aware of basics like constant optimisation, effective use of reciprocals, threading principles, etc. spending time on this symmetry stuff* and worrying about the (negligible) speed difference between an add and a multiply, particularly at an extreme precision cost, is holding you back: rather learn to write tight generic code than desperately try to speed up poor algorithms.
>
> * that's REALLY clutching at straws, and if you can't convince yourself of that you have good reason for despair. your speedup is directly proportional to the boringness of the resulting image, up to a maximum of only 2x (cf. the 200x mentioned above!), and as soon as you look away from the real axis it slows down. even if you're obsessive about that real axis, i don't think all your users (still considering going commercial?) will be.

lycium,

You lay it on a bit thick, don't you think? How about you lighten up a little. I thought this was a friendly discussion, not an inquisition. I've got over 25 years of commercial software development experience, and am able to pick and choose the work I do as a result of my commercial success. Please don't preach, okay?

You and David are probably right that the small increase in accuracy from doing a divide to calculate the coordinates of each pixel would not be worth the performance cost. Remember, though, that we are talking about one divide per pixel (plus one divide for each row), not a divide for each iteration.

I adjusted my code so that I don't do repetitive adds any more, and it improved things slightly. My images don't get distorted (skewed to one side due to roundoff errors) any more, but I am still seeing artifacts from computation error at the same magnification. I haven't done rigorous testing, but the changes seem to keep the images from completely falling apart for about another 5x magnification. Thanks, David, for the suggestion.

I miscounted, by the way. My images are showing artifacts at a width of around 4E-15, not -14.

You're right that for Mandelbrot plots, the most interesting images are not on the real axis. However, I feel that the symmetry of Julia sets is part of their appeal. Further, there are different structures in Julia sets at a variety of different scales, but those structures appear centered on the origin. See my post of a few days ago, which included a set of images showing what I am talking about (or click here).

Duncan C
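A rough way to see why artifacts show up around a width of 4E-15: neighbouring doubles near a coordinate x are `math.ulp(x)` apart (Python 3.9+), so a view only a few dozen ulps wide cannot give every pixel its own coordinate, however the coordinates are computed. The center 0.3 and the 800-pixel row below are assumptions for illustration: near 0.3 the spacing is 2^-54, about 5.6E-17, so a 4E-15 span contains only about 72 representable values.

```python
import math

def representable_across(center_x: float, width: float) -> float:
    """Approximate number of distinct doubles in a span of `width` around
    `center_x`: the width divided by the spacing of doubles there."""
    return width / math.ulp(center_x)

def distinct_columns(center_x: float, width: float, pixels: int) -> int:
    """How many different x coordinates a row of `pixels` pixels really
    gets when computed as min_x + i * delta in double precision."""
    min_x = center_x - width / 2
    delta = width / pixels
    return len({min_x + i * delta for i in range(pixels)})

print(representable_across(0.3, 4e-15))
print(distinct_columns(0.3, 4e-15, 800))
```

Once the distinct-coordinate count drops well below the pixel count, neighbouring pixels are iterated from identical starting values and the image turns blocky; going deeper needs wider number formats (e.g. extended or arbitrary precision), not a different loop structure.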

lycium

« Reply #43 on: April 02, 2007, 04:02:27 PM »

> You lay it on a bit thick, don't you think?

fair enough, the bit about despairing wasn't nice; i was frustrated that you left alone the obvious point which trifox and i were making about the relative utility of the symmetry optimisation.

> How about you lighten up a little.

that you're telling me that certainly did lighten me up, lol! you also talk about me preaching:

> I've got over 25 years of commercial software development experience, and am able to pick and choose the work I do as a result of my commercial success.

good for you; being highly qualified ought to speak for itself.

> Please don't preach, okay?

facts are facts, i'm not preaching; if you dispute something i said, please let me know - we're all here to learn. note that i'm not making this stuff up for sport, it's for your benefit - feel free to tell me if you'd rather not hear it. that's really all i'd like (but not have) to say on this matter.

« Last Edit: April 02, 2007, 04:36:39 PM by lycium »
